Installation

The Azure exporter is deployed as an Azure Container App using Terraform, through our Terraform Ocean Integration Factory module.

By default, the Azure exporter collects resources only from the subscription it is deployed in. It can be configured to ingest resources from multiple subscriptions; see the Multiple subscriptions setup section for instructions.

Additional ways to deploy the Azure exporter are described in the Azure Integration example README.

Azure infrastructure used by the Azure exporter

The Azure exporter uses the following Azure infrastructure:

- Azure Container App;
- Azure Event Grid (used for real-time data sync to Port):
  - Azure Event Grid System Topic of type `Microsoft.Resources.Subscriptions`;
  - Azure Event Grid Subscription;

Due to an Azure limitation, only one Event Grid system topic of type `Microsoft.Resources.Subscriptions` can be created per subscription. If you already have one, pass it to the integration using `event_grid_system_topic_name=<your-event-grid-system-topic-name>`.

If a system topic already exists and is not passed to the integration's deployment, the deployment will fail because it cannot create a new one.
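To check whether such a topic already exists, one option is to list the subscription's system topics with the Azure CLI and filter them by type. The sketch below is hypothetical: the function name is ours, and it assumes its input comes from `az eventgrid system-topic list --query "[].[name, topicType]" -o tsv`, which prints one tab-separated name and topic type per line.

```shell
# find_subscription_topics (hypothetical helper)
# Reads "NAME<TAB>TOPIC_TYPE" lines on stdin and prints the names of topics
# whose type is Microsoft.Resources.Subscriptions. The comparison ignores
# case, in case the CLI reports the type with different casing.
find_subscription_topics() {
  awk -F '\t' 'tolower($2) == "microsoft.resources.subscriptions" { print $1 }'
}
```

For example, `az eventgrid system-topic list --query "[].[name, topicType]" -o tsv | find_subscription_topics` prints the name to pass as `event_grid_system_topic_name`, or nothing if no such topic exists.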

Prerequisites

- Terraform >= 0.15.0

- Azure CLI >= 2.26.0

- Permissions
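Before deploying, you may want to confirm that the installed tools meet these minimum versions. The helper below is a hypothetical sketch; it relies on `sort -V` (version sort, available in GNU coreutils) to order the two version strings.

```shell
# version_at_least CURRENT MINIMUM (hypothetical helper)
# Succeeds when CURRENT >= MINIMUM. sort -V compares version strings
# component by component, so 2.9.0 sorts before 2.26.0.
version_at_least() {
  lowest=$(printf '%s\n%s\n' "$2" "$1" | sort -V | head -n 1)
  [ "$lowest" = "$2" ]
}
```

For example, `version_at_least "$(terraform version -json | jq -r .terraform_version)" 0.15.0` checks Terraform (assuming `jq` is installed), and `version_at_least "$(az version --query '"azure-cli"' -o tsv)" 2.26.0` should check the Azure CLI, whose `az version` JSON output reports the version under the `azure-cli` key.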

Permissions

To deploy the Azure exporter successfully, the user who deploys the integration must have the appropriate permissions in the Azure subscription. One of the following permission assignments is required:

- Option 1: the user has the `Owner` Azure role assigned on the subscription that the integration will be deployed to. This role provides full control and access rights.
- Option 2: for a more restrictive approach, the user has only the minimum permissions needed to deploy the integration. These permissions grant access to the specific resources and actions the deployment requires, without full `Owner` privileges. The following steps create a custom role and assign it to the user along with the other required roles:

1. Create a custom role with the following permissions:

Custom role definition

```json
{
  "id": "<ROLE_DEFINITION_ID>",
  "properties": {
    "roleName": "Azure Exporter Deployment",
    "description": "",
    "assignableScopes": ["/subscriptions/<SUBSCRIPTION_ID>"],
    "permissions": [
      {
        "actions": [
          "Microsoft.ManagedIdentity/userAssignedIdentities/read",
          "Microsoft.ManagedIdentity/userAssignedIdentities/write",
          "Microsoft.ManagedIdentity/userAssignedIdentities/assign/action",
          "Microsoft.ManagedIdentity/userAssignedIdentities/listAssociatedResources/action",
          "Microsoft.Authorization/roleDefinitions/read",
          "Microsoft.Authorization/roleDefinitions/write",
          "Microsoft.Authorization/roleAssignments/write",
          "Microsoft.Authorization/roleAssignments/read",
          "Microsoft.Resources/subscriptions/resourceGroups/write",
          "Microsoft.OperationalInsights/workspaces/tables/write",
          "Microsoft.Resources/deployments/read",
          "Microsoft.Resources/deployments/write",
          "Microsoft.OperationalInsights/workspaces/read",
          "Microsoft.OperationalInsights/workspaces/write",
          "microsoft.app/containerapps/write",
          "microsoft.app/managedenvironments/read",
          "microsoft.app/managedenvironments/write",
          "Microsoft.Resources/subscriptions/resourceGroups/read",
          "Microsoft.OperationalInsights/workspaces/sharedkeys/action",
          "microsoft.app/managedenvironments/join/action",
          "microsoft.app/containerapps/listsecrets/action",
          "microsoft.app/containerapps/delete",
          "microsoft.app/containerapps/stop/action",
          "microsoft.app/containerapps/start/action",
          "microsoft.app/containerapps/authconfigs/write",
          "microsoft.app/containerapps/authconfigs/delete",
          "microsoft.app/containerapps/revisions/restart/action",
          "microsoft.app/containerapps/revisions/activate/action",
          "microsoft.app/containerapps/revisions/deactivate/action",
          "microsoft.app/containerapps/sourcecontrols/write",
          "microsoft.app/containerapps/sourcecontrols/delete",
          "microsoft.app/managedenvironments/delete",
          "Microsoft.Authorization/roleAssignments/delete",
          "Microsoft.Authorization/roleDefinitions/delete",
          "Microsoft.OperationalInsights/workspaces/delete",
          "Microsoft.ManagedIdentity/userAssignedIdentities/delete",
          "Microsoft.Resources/subscriptions/resourceGroups/delete"
        ],
        "notActions": [],
        "dataActions": [],
        "notDataActions": []
      }
    ]
  }
}
```

2. Assign the following roles to the user on the subscription that will be used to deploy the integration:

- The custom `Azure Exporter Deployment` role defined above.
- The `API Management Workspace Contributor` role.
- The `EventGrid Contributor` role.
- The `ContainerApp Reader` role.
- The `EventGrid EventSubscription Contributor` role.
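If you prefer to prepare these two steps from a terminal, the sketch below only renders text for review and does not call Azure. `render_role_definition` fills the `<SUBSCRIPTION_ID>` and `<ROLE_DEFINITION_ID>` placeholders of the JSON above, and `print_role_assignments` prints one `az role assignment create` command per role listed in step 2. Both function names are hypothetical, and the custom role must already exist before its assignment command can succeed.

```shell
# Hypothetical helpers for the two steps above. Neither calls Azure;
# they only produce text you can review before using it.

# render_role_definition SUBSCRIPTION_ID ROLE_DEFINITION_ID
# Reads the role-definition JSON on stdin and fills in its placeholders.
# Assumes the IDs contain no "|" or "&" characters (special to sed here).
render_role_definition() {
  sed -e "s|<SUBSCRIPTION_ID>|$1|g" \
      -e "s|<ROLE_DEFINITION_ID>|$2|g"
}

# Roles from step 2, one per line (the custom role comes first).
REQUIRED_ROLES='Azure Exporter Deployment
API Management Workspace Contributor
EventGrid Contributor
ContainerApp Reader
EventGrid EventSubscription Contributor'

# print_role_assignments ASSIGNEE SUBSCRIPTION_ID
# Prints (does not run) one "az role assignment create" command per role.
print_role_assignments() {
  printf '%s\n' "$REQUIRED_ROLES" | while IFS= read -r role; do
    printf 'az role assignment create --assignee %s --role "%s" --scope /subscriptions/%s\n' \
      "$1" "$role" "$2"
  done
}
```

For example, `render_role_definition "$SUBSCRIPTION_ID" "$ROLE_ID" < role.json` prints the filled-in definition, and `print_role_assignments user@example.com "$SUBSCRIPTION_ID"` prints five commands to review and run.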

Installation

Follow this guide to create a service principal and obtain your Azure credentials:

- AZURE_CLIENT_ID
- AZURE_CLIENT_SECRET
- AZURE_TENANT_ID
- AZURE_SUBSCRIPTION_ID

Alternatively, register an app in the Azure portal to obtain your credentials.
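Whichever method you use, the integration needs all four values. As a rough guide, the JSON printed by `az ad sp create-for-rbac` contains three of them as `appId` (client ID), `password` (client secret) and `tenant` (tenant ID). The hypothetical helper below checks that the four variables are set in the current shell before you run an installation command.

```shell
# check_azure_credentials (hypothetical helper)
# Prints "missing: NAME" to stderr for each unset or empty credential
# variable, and fails if any of them is missing.
check_azure_credentials() {
  missing=0
  for name in AZURE_CLIENT_ID AZURE_CLIENT_SECRET AZURE_TENANT_ID AZURE_SUBSCRIPTION_ID; do
    # Indirect lookup of the variable whose name is stored in $name.
    eval "value=\${$name:-}"
    if [ -z "$value" ]; then
      printf 'missing: %s\n' "$name" >&2
      missing=1
    fi
  done
  return "$missing"
}
```

For example, after setting the variables, `check_azure_credentials && echo "credentials look complete"` confirms nothing was left out.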

Two installation options are available: Real Time & Always On, and Scheduled.

Real Time & Always On

With this installation option, the integration can update Port in real time using webhooks.

This table summarizes the available parameters for the installation. Set them as you wish in the script below, then copy it and run it in your terminal:

| Parameter | Description | Example | Required |
|---|---|---|---|
| `port.clientId` | Your Port client ID | | ✅ |
| `port.clientSecret` | Your Port client secret | | ✅ |
| `port.baseUrl` | Your Port API URL: `https://api.getport.io` for EU, `https://api.us.getport.io` for US | | ✅ |
| `integration.config.appHost` | The host of the Port Ocean app. Used to set up the integration endpoint as the target for webhooks | `https://my-ocean-integration.com` | ❌ |

Advanced configuration

| Parameter | Description |
|---|---|
| `integration.eventListener.type` | The event listener type. Read more about event listeners |
| `integration.type` | The integration to be installed |
| `scheduledResyncInterval` | The number of minutes between resyncs. When not set, the integration resyncs on each event listener resync event. Read more about scheduledResyncInterval |
| `initializePortResources` | Defaults to `true`. When set to `true`, the integration creates default blueprints and the Port app config mapping. Read more about initializePortResources |

You can install the integration using Terraform, Helm, or ArgoCD.

Terraform

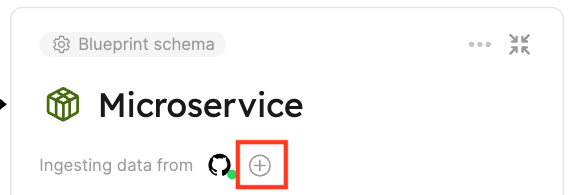

- Login to Port and browse to the builder page

- Open the ingest modal by expanding one of the blueprints and clicking the ingest button on the blueprints.

-

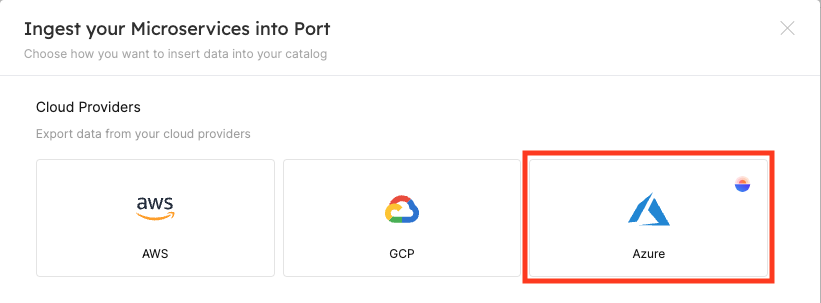

Click on the Azure Exporter option under the Cloud Providers section:

-

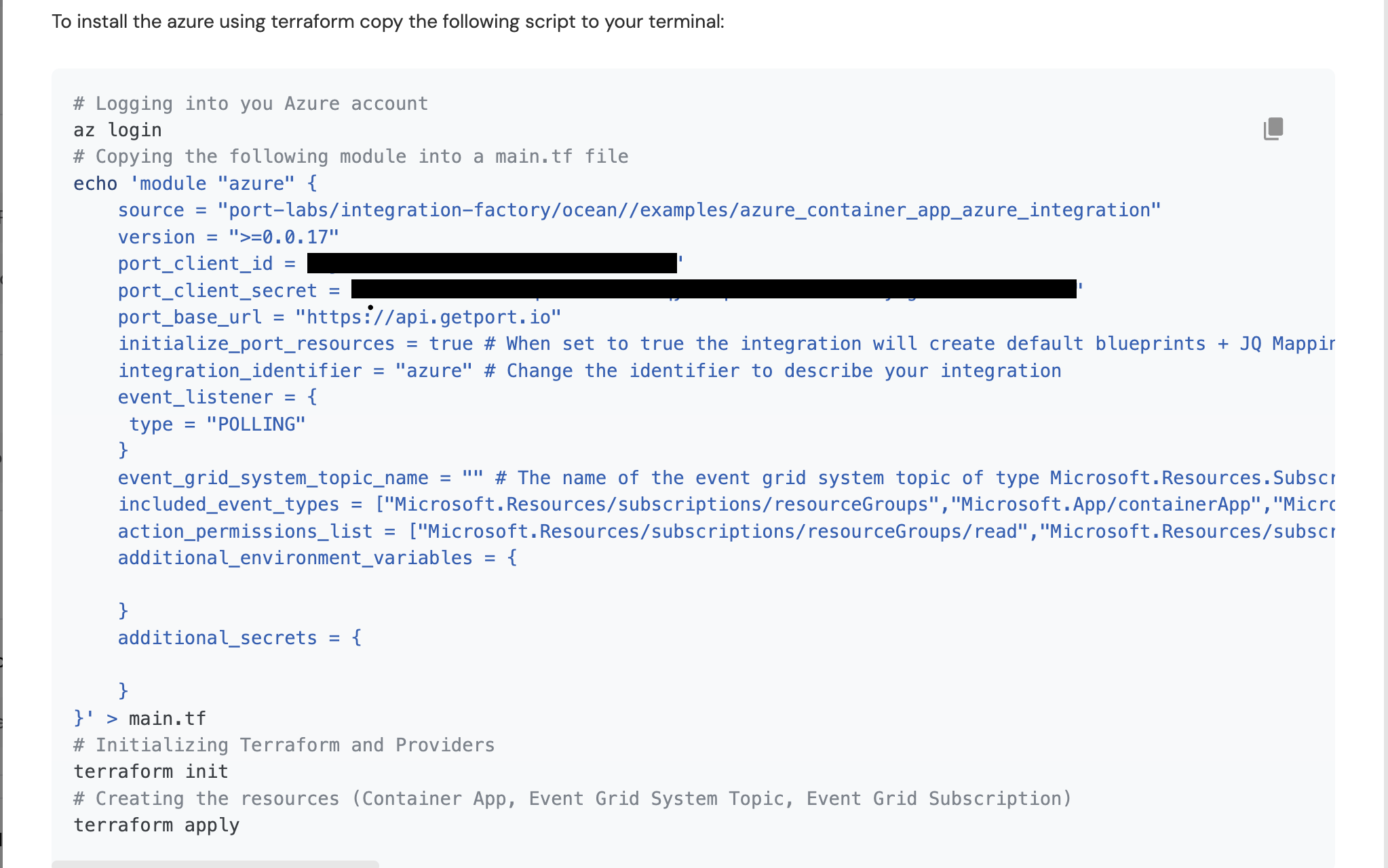

Edit and copy the installation command.

Tip: The installation command includes placeholders that let you customize the integration's configuration. For example:

- Specify the `event_grid_system_topic_name` parameter if you already have an Event Grid system topic of type `Microsoft.Resources.Subscriptions` in your subscription.
- Specify the `event_grid_event_filter_list` parameter if you want to listen to more events.
- Specify the `action_permissions_list` parameter if you want to grant the integration more permissions.

- Run the command in your terminal to deploy the Azure exporter.
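The installation command itself is not reproduced here, so its exact flag syntax is an assumption. If the command passes these parameters to Terraform as `-var` flags, and the list parameters (`event_grid_event_filter_list`, `action_permissions_list`) are Terraform `list(string)` variables, their values can be written in HCL list syntax. The hypothetical helper below builds that syntax; the values each list accepts are defined by the integration's Terraform module.

```shell
# tf_list_var NAME [ITEM...] (hypothetical helper)
# Prints NAME=["ITEM1","ITEM2",...] in HCL list syntax for terraform -var.
# Assumes the items contain no double quotes.
tf_list_var() {
  [ "$#" -ge 1 ] || return 1
  name="$1"
  shift
  list=""
  for item in "$@"; do
    if [ -n "$list" ]; then
      list="$list,"
    fi
    list="$list\"$item\""
  done
  printf '%s=[%s]\n' "$name" "$list"
}
```

For example, `terraform apply -var="$(tf_list_var event_grid_event_filter_list VALUE_1 VALUE_2)"` passes `event_grid_event_filter_list=["VALUE_1","VALUE_2"]`, where `VALUE_1` and `VALUE_2` are placeholders.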

Helm

To install the integration using Helm, run the following command:

```shell
helm repo add --force-update port-labs https://port-labs.github.io/helm-charts
helm upgrade --install my-azure-integration port-labs/port-ocean \
  --set port.clientId="PORT_CLIENT_ID" \
  --set port.clientSecret="PORT_CLIENT_SECRET" \
  --set port.baseUrl="https://api.getport.io" \
  --set initializePortResources=true \
  --set scheduledResyncInterval=60 \
  --set integration.identifier="my-azure-integration" \
  --set integration.type="azure" \
  --set integration.eventListener.type="POLLING" \
  --set "extraEnv[0].name=AZURE_CLIENT_ID" \
  --set "extraEnv[0].value=xxxx-your-client-id-xxxxx" \
  --set "extraEnv[1].name=AZURE_CLIENT_SECRET" \
  --set "extraEnv[1].value=xxxxxxx-your-client-secret-xxxx" \
  --set "extraEnv[2].name=AZURE_TENANT_ID" \
  --set "extraEnv[2].value=xxxx-your-tenant-id-xxxxx" \
  --set "extraEnv[3].name=AZURE_SUBSCRIPTION_ID" \
  --set "extraEnv[3].value=xxxx-your-subscription-id-xxxxx"
```
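Passing secrets with `--set` leaves them in your shell history. One alternative, sketched below under the assumption that the chart reads the same `extraEnv` list used in the command above, is to write those entries to a values file and pass it with `-f`. The function name is hypothetical; it reads the `AZURE_*` variables from the current shell.

```shell
# azure_env_values (hypothetical helper)
# Prints an extraEnv values fragment built from the current AZURE_* shell
# variables. Each value is single-quoted for YAML, with any embedded
# single quote doubled, which is YAML's escape inside single quotes.
azure_env_values() {
  printf 'extraEnv:\n'
  for name in AZURE_CLIENT_ID AZURE_CLIENT_SECRET AZURE_TENANT_ID AZURE_SUBSCRIPTION_ID; do
    eval "value=\${$name:-}"
    escaped=$(printf '%s' "$value" | sed "s/'/''/g")
    printf "  - name: %s\n    value: '%s'\n" "$name" "$escaped"
  done
}
```

For example, run `azure_env_values > azure-values.yaml`, then replace the `extraEnv` flags in the command above with `-f azure-values.yaml`. Keep this file out of version control, since it contains the client secret.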

The `baseUrl`, `port_region`, `port.baseUrl`, `portBaseUrl`, `port_base_url` and `OCEAN__PORT__BASE_URL` parameters select which instance of the Port API is used.

Port exposes two API instances, one for the EU region of Port and one for the US region of Port:

- If you use the EU region of Port, available at https://app.getport.io, your Port API URL is `https://api.getport.io`.
- If you use the US region of Port, available at https://app.us.getport.io, your Port API URL is `https://api.us.getport.io`.
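When scripting installations, the region-to-URL mapping above can be kept in one place. The function below is a hypothetical sketch; the `eu`/`us` region names are our own convention.

```shell
# port_api_url REGION (hypothetical helper)
# Prints the Port API URL for the given Port region, or fails for an
# unknown region.
port_api_url() {
  case "$1" in
    eu|EU) printf '%s\n' "https://api.getport.io" ;;
    us|US) printf '%s\n' "https://api.us.getport.io" ;;
    *)
      printf 'unknown Port region: %s\n' "$1" >&2
      return 1
      ;;
  esac
}
```

For example, `--set port.baseUrl="$(port_api_url us)"` in the Helm command selects the US instance.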

ArgoCD

To install the integration using ArgoCD, follow these steps:

- Create a `values.yaml` file in `argocd/my-ocean-azure-integration` in your git repository with the following content:

```yaml
initializePortResources: true
scheduledResyncInterval: 120
integration:
  identifier: my-ocean-azure-integration
  type: azure
  eventListener:
    type: POLLING
extraEnv:
  - name: AZURE_CLIENT_ID
    value: xxxx-your-client-id-xxxxx
  - name: AZURE_CLIENT_SECRET
    value: xxxxxxx-your-client-secret-xxxx
  - name: AZURE_TENANT_ID
    value: xxxx-your-tenant-id-xxxxx
  - name: AZURE_SUBSCRIPTION_ID
    value: xxxx-your-subscription-id-xxxxx
```

- Install the `my-ocean-azure-integration` ArgoCD Application by creating the following `my-ocean-azure-integration.yaml` manifest:

Remember to replace the placeholders `YOUR_PORT_CLIENT_ID`, `YOUR_PORT_CLIENT_SECRET` and `YOUR_GIT_REPO_URL`.

ArgoCD documentation on multiple sources can be found here.

ArgoCD Application

```yaml
apiVersion: argoproj.io/v1alpha1
kind: Application
metadata:
  name: my-ocean-azure-integration
  namespace: argocd
spec:
  destination:
    namespace: my-ocean-azure-integration
    server: https://kubernetes.default.svc
  project: default
  sources:
    - repoURL: 'https://port-labs.github.io/helm-charts/'
      chart: port-ocean
      targetRevision: 0.1.14
      helm:
        valueFiles:
          - $values/argocd/my-ocean-azure-integration/values.yaml
        parameters:
          - name: port.clientId
            value: YOUR_PORT_CLIENT_ID
          - name: port.clientSecret
            value: YOUR_PORT_CLIENT_SECRET
          - name: port.baseUrl
            value: https://api.getport.io
    - repoURL: YOUR_GIT_REPO_URL
      targetRevision: main
      ref: values
  syncPolicy:
    automated:
      prune: true
      selfHeal: true
    syncOptions:
      - CreateNamespace=true
```


- Apply your application manifest with `kubectl`:

```shell
kubectl apply -f my-ocean-azure-integration.yaml
```
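Before running `kubectl apply`, it can help to confirm that no placeholders are left in the manifest. The hypothetical helper below searches a file for `YOUR_*` placeholders, prints any it finds with line numbers, and fails if there are any. It does not check the `xxxx-...` placeholders in `values.yaml`.

```shell
# check_no_placeholders FILE (hypothetical helper)
# Exit status: 0 if no YOUR_* placeholder is found, 1 if at least one is
# found (matches are printed with line numbers), 2 if grep itself fails.
check_no_placeholders() {
  grep -n 'YOUR_[A-Z_]*' "$1"
  case $? in
    0) return 1 ;;  # placeholders found
    1) return 0 ;;  # none found
    *) return 2 ;;  # grep error, e.g. an unreadable file
  esac
}
```

For example: `check_no_placeholders my-ocean-azure-integration.yaml && kubectl apply -f my-ocean-azure-integration.yaml`.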

Scheduled

The scheduled installation can run on GitHub Actions, Jenkins, or Azure DevOps.

GitHub

This workflow runs the Azure integration once and then exits, which is useful for scheduled data ingestion.

If you want the integration to update Port in real time using webhooks, use the Real Time & Always On installation option.

Make sure to configure the following GitHub secrets:

| Parameter | Description | Required |
|---|---|---|
| `OCEAN__PORT__CLIENT_ID` | Your Port client ID | ✅ |
| `OCEAN__PORT__CLIENT_SECRET` | Your Port client secret | ✅ |
| `OCEAN__PORT__BASE_URL` | Your Port API URL: `https://api.getport.io` for EU, `https://api.us.getport.io` for US | ✅ |
| `OCEAN__SECRET__AZURE_CLIENT_ID` | Your Azure client ID | ✅ |
| `OCEAN__SECRET__AZURE_CLIENT_SECRET` | Your Azure client secret | ✅ |
| `OCEAN__SECRET__AZURE_TENANT_ID` | Your Azure tenant ID | ✅ |
| `OCEAN__SECRET__AZURE_SUBSCRIPTION_ID` | Your Azure subscription ID | ✅ |
| `OCEAN__INITIALIZE_PORT_RESOURCES` | Defaults to `true`. When set to `false`, the integration does not create default blueprints or the Port app config mapping | ❌ |
| `OCEAN__INTEGRATION__IDENTIFIER` | A custom identifier for your integration. If not set, the default identifier is used | ❌ |

Here is an example `azure-integration.yml` workflow file:

```yaml
name: Azure Exporter Workflow

# This workflow runs the Azure exporter once.
on:
  workflow_dispatch:

jobs:
  run-integration:
    runs-on: ubuntu-latest

    steps:
      - uses: port-labs/ocean-sail@v1
        env:
          AZURE_CLIENT_ID: ${{ secrets.OCEAN__SECRET__AZURE_CLIENT_ID }}
          AZURE_CLIENT_SECRET: ${{ secrets.OCEAN__SECRET__AZURE_CLIENT_SECRET }}
          AZURE_TENANT_ID: ${{ secrets.OCEAN__SECRET__AZURE_TENANT_ID }}
          AZURE_SUBSCRIPTION_ID: ${{ secrets.OCEAN__SECRET__AZURE_SUBSCRIPTION_ID }}
        with:
          type: "azure"
          identifier: "my-azure-integration"
          port_client_id: ${{ secrets.OCEAN__PORT__CLIENT_ID }}
          port_client_secret: ${{ secrets.OCEAN__PORT__CLIENT_SECRET }}
          port_base_url: https://api.getport.io
```
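To confirm that the repository secrets from the table exist, one option is to compare them against the output of the GitHub CLI's `gh secret list`. The sketch below assumes that output has the secret name in its first tab-separated column (as it does when piped); the function name is hypothetical, and it only reports names, never values.

```shell
# Secret names from the table above, one per line.
REQUIRED_SECRETS='OCEAN__PORT__CLIENT_ID
OCEAN__PORT__CLIENT_SECRET
OCEAN__PORT__BASE_URL
OCEAN__SECRET__AZURE_CLIENT_ID
OCEAN__SECRET__AZURE_CLIENT_SECRET
OCEAN__SECRET__AZURE_TENANT_ID
OCEAN__SECRET__AZURE_SUBSCRIPTION_ID'

# missing_secrets (hypothetical helper)
# Reads secret names from the first tab-separated column of stdin, prints
# every required name that is absent, and fails if any is missing.
missing_secrets() {
  present=$(cut -f1)
  status=0
  for name in $REQUIRED_SECRETS; do
    if ! printf '%s\n' "$present" | grep -qx "$name"; then
      printf '%s\n' "$name"
      status=1
    fi
  done
  return "$status"
}
```

For example, `gh secret list | missing_secrets` prints any missing secret names and exits with a non-zero status if there are any.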

Jenkins

This pipeline runs the Azure integration once and then exits, which is useful for scheduled data ingestion.

Your Jenkins agent must be able to run Docker commands.

If you want the integration to update Port in real time using webhooks, use the Real Time & Always On installation option.
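One way to fail early on an agent that lacks the required tooling is a small pre-flight check at the start of the job. The hypothetical helper below reports every command that is not on the `PATH`; the same check applies to the Azure DevOps agents described later.

```shell
# require_commands CMD... (hypothetical helper)
# Prints each command that is not found on PATH to stderr and fails if
# at least one is missing.
require_commands() {
  status=0
  for cmd in "$@"; do
    if ! command -v "$cmd" >/dev/null 2>&1; then
      printf 'missing command: %s\n' "$cmd" >&2
      status=1
    fi
  done
  return "$status"
}
```

For example, `require_commands docker && docker info > /dev/null` also confirms that the Docker daemon is reachable, since `docker info` fails when it cannot connect.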

Make sure to configure the following Jenkins credentials of type Secret text:

| Parameter | Description | Required |
|---|---|---|
| `OCEAN__PORT__CLIENT_ID` | Your Port client ID | ✅ |
| `OCEAN__PORT__CLIENT_SECRET` | Your Port client secret | ✅ |
| `OCEAN__PORT__BASE_URL` | Your Port API URL: `https://api.getport.io` for EU, `https://api.us.getport.io` for US | ✅ |
| `OCEAN__SECRET__AZURE_CLIENT_ID` | Your Azure client ID | ✅ |
| `OCEAN__SECRET__AZURE_CLIENT_SECRET` | Your Azure client secret | ✅ |
| `OCEAN__SECRET__AZURE_TENANT_ID` | Your Azure tenant ID | ✅ |
| `OCEAN__SECRET__AZURE_SUBSCRIPTION_ID` | Your Azure subscription ID | ✅ |
| `OCEAN__INITIALIZE_PORT_RESOURCES` | Defaults to `true`. When set to `false`, the integration does not create default blueprints or the Port app config mapping | ❌ |
| `OCEAN__INTEGRATION__IDENTIFIER` | A custom identifier for your integration. If not set, the default identifier is used | ❌ |

Here is an example Jenkinsfile (Groovy pipeline):

```groovy
pipeline {
    agent any

    stages {
        stage('Run Azure Integration') {
            steps {
                script {
                    withCredentials([
                        string(credentialsId: 'OCEAN__PORT__CLIENT_ID', variable: 'OCEAN__PORT__CLIENT_ID'),
                        string(credentialsId: 'OCEAN__PORT__CLIENT_SECRET', variable: 'OCEAN__PORT__CLIENT_SECRET'),
                        string(credentialsId: 'OCEAN__SECRET__AZURE_CLIENT_ID', variable: 'OCEAN__SECRET__AZURE_CLIENT_ID'),
                        string(credentialsId: 'OCEAN__SECRET__AZURE_CLIENT_SECRET', variable: 'OCEAN__SECRET__AZURE_CLIENT_SECRET'),
                        string(credentialsId: 'OCEAN__SECRET__AZURE_TENANT_ID', variable: 'OCEAN__SECRET__AZURE_TENANT_ID'),
                        string(credentialsId: 'OCEAN__SECRET__AZURE_SUBSCRIPTION_ID', variable: 'OCEAN__SECRET__AZURE_SUBSCRIPTION_ID')
                    ]) {
                        sh('''
                            # Set the Docker image and run the integration once
                            integration_type="azure"
                            version="latest"

                            image_name="ghcr.io/port-labs/port-ocean-${integration_type}:${version}"

                            docker run -i --rm --platform=linux/amd64 \
                                -e OCEAN__EVENT_LISTENER='{"type":"ONCE"}' \
                                -e OCEAN__INITIALIZE_PORT_RESOURCES=true \
                                -e OCEAN__PORT__CLIENT_ID=$OCEAN__PORT__CLIENT_ID \
                                -e OCEAN__PORT__CLIENT_SECRET=$OCEAN__PORT__CLIENT_SECRET \
                                -e OCEAN__PORT__BASE_URL='https://api.getport.io' \
                                -e AZURE_CLIENT_ID=$OCEAN__SECRET__AZURE_CLIENT_ID \
                                -e AZURE_CLIENT_SECRET=$OCEAN__SECRET__AZURE_CLIENT_SECRET \
                                -e AZURE_TENANT_ID=$OCEAN__SECRET__AZURE_TENANT_ID \
                                -e AZURE_SUBSCRIPTION_ID=$OCEAN__SECRET__AZURE_SUBSCRIPTION_ID \
                                $image_name

                            exit $?
                        ''')
                    }
                }
            }
        }
    }
}
```

Azure DevOps

This pipeline runs the Azure integration once and then exits, which is useful for scheduled data ingestion.

Your Azure DevOps agent must be able to run Docker commands. Learn more about agents here.

If you want the integration to update Port in real time using webhooks, use the Real Time & Always On installation option.

Variable groups store values and secrets that you can use in pipelines across your project. Learn more about variable groups.

Setting up your credentials

- Create a variable group: name it `port-ocean-credentials` and store the required variables from the table.
- Authorize your pipeline:
  - Go to "Library" -> "Variable groups".
  - Find `port-ocean-credentials` and click on it.
  - Select "Pipeline Permissions" and add your pipeline to the authorized list.

| Parameter | Description | Required |
|---|---|---|
| `OCEAN__PORT__CLIENT_ID` | Your Port client ID | ✅ |
| `OCEAN__PORT__CLIENT_SECRET` | Your Port client secret | ✅ |
| `OCEAN__PORT__BASE_URL` | Your Port API URL: `https://api.getport.io` for EU, `https://api.us.getport.io` for US | ✅ |
| `OCEAN__SECRET__AZURE_CLIENT_ID` | Your Azure client ID | ✅ |
| `OCEAN__SECRET__AZURE_CLIENT_SECRET` | Your Azure client secret | ✅ |
| `OCEAN__SECRET__AZURE_TENANT_ID` | Your Azure tenant ID | ✅ |
| `OCEAN__SECRET__AZURE_SUBSCRIPTION_ID` | Your Azure subscription ID | ✅ |
| `OCEAN__INITIALIZE_PORT_RESOURCES` | Defaults to `true`. When set to `false`, the integration does not create default blueprints or the Port app config mapping | ❌ |
| `OCEAN__INTEGRATION__IDENTIFIER` | A custom identifier for your integration. If not set, the default identifier is used | ❌ |

Here is an example `azure-integration.yml` pipeline file:

```yaml
trigger:
  - main

pool:
  vmImage: "ubuntu-latest"

variables:
  - group: port-ocean-credentials

steps:
  - script: |
      # Set the Docker image and run the integration once
      integration_type="azure"
      version="latest"

      image_name="ghcr.io/port-labs/port-ocean-$integration_type:$version"

      docker run -i --rm --platform=linux/amd64 \
        -e OCEAN__EVENT_LISTENER='{"type":"ONCE"}' \
        -e OCEAN__INITIALIZE_PORT_RESOURCES=true \
        -e OCEAN__PORT__CLIENT_ID=$(OCEAN__PORT__CLIENT_ID) \
        -e OCEAN__PORT__CLIENT_SECRET=$(OCEAN__PORT__CLIENT_SECRET) \
        -e OCEAN__PORT__BASE_URL='https://api.getport.io' \
        -e AZURE_CLIENT_ID=$(OCEAN__SECRET__AZURE_CLIENT_ID) \
        -e AZURE_CLIENT_SECRET=$(OCEAN__SECRET__AZURE_CLIENT_SECRET) \
        -e AZURE_TENANT_ID=$(OCEAN__SECRET__AZURE_TENANT_ID) \
        -e AZURE_SUBSCRIPTION_ID=$(OCEAN__SECRET__AZURE_SUBSCRIPTION_ID) \
        $image_name

      exit $?
    displayName: "Ingest Data into Port"
```


Multiple subscriptions setup

To configure the Azure exporter to ingest resources from other subscriptions, assign permissions to the managed identity running the integration in each subscription you want to ingest resources from.

- Head to the Azure portal and navigate to the subscription you want to ingest resources from.
- In the subscription's Access control (IAM) section, go to the Role assignments tab and choose the appropriate role for the managed identity responsible for the integration.
- Assign this role to the managed identity associated with the integration.
- Repeat this process for each subscription you wish to include.
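The same role assignments can be prepared with the Azure CLI. The sketch below prints (but does not run) one `az role assignment create` command per subscription for the integration's managed identity. The function name is hypothetical, and the role is a parameter because the appropriate role depends on your setup. You can look up the identity's principal ID with `az identity show --name <identity-name> --resource-group <resource-group> --query principalId -o tsv`.

```shell
# print_subscription_assignments PRINCIPAL_ID ROLE SUBSCRIPTION_ID...
# (hypothetical helper) Prints one "az role assignment create" command per
# subscription, granting ROLE to the managed identity. Managed identities
# are assigned with the ServicePrincipal principal type.
print_subscription_assignments() {
  [ "$#" -ge 2 ] || return 1
  principal_id="$1"
  role="$2"
  shift 2
  for subscription_id in "$@"; do
    printf 'az role assignment create --assignee-object-id %s --assignee-principal-type ServicePrincipal --role "%s" --scope /subscriptions/%s\n' \
      "$principal_id" "$role" "$subscription_id"
  done
}
```

For example, `print_subscription_assignments "$PRINCIPAL_ID" Reader <subscription-1> <subscription-2>` prints two commands to review and run, assuming the `Reader` role fits your needs.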

For real-time data ingestion from multiple subscriptions, set up an Event Grid System Topic and an Event Grid Subscription in each subscription you want to include, connecting them to the Azure exporter.

For a detailed example that uses Terraform to configure the Event Grid System Topic and Event Grid Subscription based on the installation output of the Azure exporter, refer to this example.

Further information

- Refer to the examples page for practical configurations and their corresponding blueprint definitions.